One-on-Ones on a Pair Programming Team

One-on-ones are a well-known management strategy. They help reduce communication misses, keep everyone on course, and provide an easy platform for feedback. I’ve done them throughout my management career, but over the past few years I’ve had a few starts and stops with them.

My scenario over the past few years has been working as an engineer, and often a tech lead, on small teams where we paired as much as 80% of the time. Sitting side by side and rotating pairs often led me to experiment with skipping one-on-ones. If you’re having regular conversations over the code, do one-on-ones serve enough of a purpose?

I decided they were important enough to restart after my first year on the new job. Part of my reluctance was the need to come up to speed on a number of technologies. I skimped on spending time for tactical management tasks. I relished staying deep in the code and design, but I should still have carved out the time for one-on-ones.

When I transitioned to leading a new team about 6 months ago I again let the one-on-ones slip off my radar. I told myself I would restart them after I felt out the new team. Turned out I got lazy and took 6 months to restart them. Even on teams that pair and sit in close proximity, some conversations never come up and it’s rare to discuss items like career aspirations when the whole team is housed at one long table.

I have made a single adjustment from my old style, where I ran 30-minute one-on-ones once a week. For my current team:

- Scheduled for 30 minutes.

- Most of the agenda is up to the employee, and sometimes we discuss future career type goals.

- The last 5-10 minutes are for me, news I need to pass on or lightweight feedback.

- Generally the one-on-ones average about 15 minutes, but they’re still scheduled for the full 30.

- I rotate through all of them one after the other so with 3 we’re often done after about an hour.

- If we miss a week for some reason it’s not a big deal, since these are weekly.

Experimenting With External Blog Pressure

Over the past several years my blog has followed the path of many others, gradually falling into an irregular posting schedule until there were no more than a few posts per year. I always felt guilty, but not enough to put real effort into restarting it. I came across a post on SimpleProgrammer.com offering a short email-driven program to start or restart a blog, and I went ahead and signed up. Sometimes a bit of external pressure is just enough to restart a habit. So this is the third new post in the last 2 weeks, and I’m attempting to post every Monday now. Can’t complain that it hasn’t worked so far, and feel free to blast me if I start falling short.

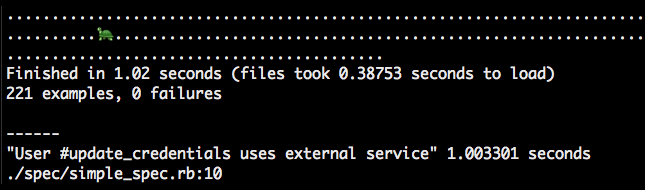

New Gem: Yertle Formatter

I launched my first Ruby gem a few days ago, yertle_formatter. It’s a custom RSpec 3 formatter that prints out turtles for slow specs and then lists them in order of slowest specs.
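The core idea (mark slow specs with a turtle, then list them slowest first) can be sketched in plain Ruby. This is only an illustration and not the gem's actual code: the `TimedExample` struct, the `SLOW_THRESHOLD` cutoff, and both helper methods are hypothetical names invented for this sketch, and a real formatter would get its timings from RSpec rather than from a hand-built array.

```ruby
# Illustrative sketch only; none of these names come from yertle_formatter.
TURTLE = "\u{1F422}"  # turtle emoji
SLOW_THRESHOLD = 0.1  # seconds; an assumed cutoff, not the gem's setting

# Hypothetical record of one finished spec and how long it took.
TimedExample = Struct.new(:description, :run_time)

# Progress marker: a turtle for a slow spec, a dot otherwise.
def progress_marker(example)
  example.run_time > SLOW_THRESHOLD ? TURTLE : "."
end

# Only the slow specs, ordered from slowest to fastest.
def slowest_first(examples)
  examples.select { |ex| ex.run_time > SLOW_THRESHOLD }
          .sort_by { |ex| -ex.run_time }
end

examples = [
  TimedExample.new("parses a tweet", 0.02),
  TimedExample.new("syncs the timeline", 0.85),
  TimedExample.new("rebuilds the index", 0.40)
]

# One marker per spec, then the slow-spec summary.
puts examples.map { |ex| progress_marker(ex) }.join
slowest_first(examples).each do |ex|
  puts format("%.2fs  %s", ex.run_time, ex.description)
end
```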

I worked out a lot of experiments with:

With 2015 ahead I may be working on some new gems soon. As a first gem goes, writing a formatter was a nice way to get started and published.

RSpec Stubs with no_args

We’ve been getting pretty particular about our stub/mock expectations at work. A few months ago I would have been perfectly happy with:

    TwitterGateway.stub(:new).and_return(double)

I didn’t worry about specifying that I didn’t pass any arguments to the constructor. After it was pointed out that the stub didn’t fully specify its expectations, I changed to this style:

    TwitterGateway.stub(:new).with().and_return(double)

Then a colleague pointed out a nice bit of syntactic sugar. You can simply specify a no_args matcher if no arguments are passed in:

    TwitterGateway.stub(:new).with(no_args).and_return(double)

A more complete stub with better readability. Reminds me of how I fell in love with RSpec the first time I saw it back in 2006.

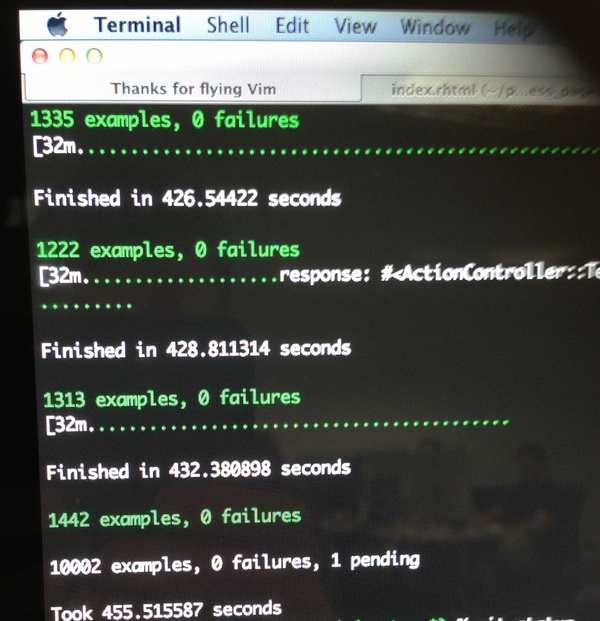

10,000 Tests and Counting

I played a “Yeah” sound effect in Campfire a few weeks ago in celebration of checking in our 10,000th test. It was a milestone worth celebrating with both Crème Brûlée Bread Pudding and a chocolate chip cookie. Stepping back a few years, I had to fight policy battles just to allot any development time to testing, or even to check tests into CVS with the production code.

Some good things about 10,000 tests and counting:

- We have pretty good confidence that we can catch breaking changes throughout the app. CI and a suite of much slower QA Acceptance tests add to that confidence.

- We can run the entire suite of 10,000 RSpec examples in about 8 minutes on the newest MacBook Pros with 16GB of RAM and 4 cores plus Hyper-Threading.

- Finding old crufty areas of the codebase that aren’t tested is a rare surprise rather than a common experience.

- Even our large “god” classes are generally well tested.

- We’re constantly thinking about ways to increase the speed of the overall run to at least keep it under the 10 minute threshold rule of thumb. This tends to lead to good refactoring efforts to decouple slow tests from their slow dependencies.
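One common shape for that kind of decoupling is pulling a rule out of an ActiveRecord model into a plain Ruby object, so the spec for the rule needs neither Rails nor the database. The sketch below is an illustration under that assumption, not code from our app: `LateFeeCalculator`, the fee value, and the `invoice` mentioned in the comment are all hypothetical.

```ruby
# Hypothetical plain Ruby object. The rule lives here rather than inside an
# ActiveRecord model, so exercising it never loads Rails or hits the database.
class LateFeeCalculator
  DAILY_FEE_CENTS = 50 # assumed value, for illustration only

  def initialize(days_overdue)
    @days_overdue = days_overdue
  end

  # Fee in cents; a negative day count (paid early) is treated as zero.
  def fee_cents
    [@days_overdue, 0].max * DAILY_FEE_CENTS
  end
end

# A model (not shown) would hand over a plain value, e.g.
#   LateFeeCalculator.new(invoice.days_overdue).fee_cents
puts LateFeeCalculator.new(3).fee_cents # prints 150
```

A spec for a class like this only needs to require the one file, which keeps it out of the slow part of the suite.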

Some not so good things:

- Many of the ‘unit’ tests are really light integration tests since they depend on database objects, Rails ActiveRecord objects in our case.

- Some of our ‘god’ classes have 3000+ lines of tests and take 2-3 minutes to run on their own.

- We have to rely on tools like parallel_tests to distribute our unit test runs.

- If it doesn’t look like a change will impact anything outside the new code we sometimes skip running a full spec and let the CI server catch issues.

- Individual specs that use ActiveRecord often take 5-8 seconds to spin up, which is painfully long for a fast TDD cycle.

- Our full acceptance test suite still isn’t consistent enough or running on CI, so we depend even more on the indirect integration testing in our unit test suite.

- We’d like to use things like Guard or autotest, but we haven’t been able to make them work with such a large number of tests.

Even with all the cons of a really large test suite, I love that we have it and run it all day long.